Why Our Human-Centric Approach to AI Upskilling Beat the Tech Hype (and Scored 9.7/10 NPS)

- Jamie Bykov-Brett

- Nov 28, 2025

- 10 min read

“Which AI tools should we get?”

That’s the opening line in most conversations I have with leaders. Budgets are ready, vendors are circling, and everyone hopes the “right” platform will magically unlock AI value.

These days, I give a very consistent reply:

“Before we talk tools, tell me this: What do you actually want to happen at the end? What value do you want AI to create?”

Silence. Then the cogs start turning.

Because AI is fundamentally intent-driven. If we don’t know what we want, no tool can give it to us.

Recently I had the chance to prove this, properly, with data.

Over six months, I ran an AI Upskills programme with Insights. Participants were mostly in learning experience design roles, not from tech teams. We met for just over an hour a week, split across two sessions, and we designed the whole thing around human skills and clear intent, not around specific tools.

By the time we wrapped:

→ Confidence choosing the right AI tool had more than doubled

→ 86% of participants were using AI daily (up from 57%)

→ Most were saving 2–4 hours per week

→ The programme scored a 9.7/10 Net Promoter Score

We didn’t get there by teaching people which buttons to press. We got there by helping them become clearer, braver and more creative in how they think.

AI Is Intent-Driven (and That Changes Everything)

The whole point of generative AI is that it lowers the technical skill needed to operate digital tools. You no longer need to know how to code a model or configure an API to get enormous value.

What you do need is:

→ Clarity about the outcome you want

→ Curiosity to explore different approaches

→ Creativity in how you express ideas

→ Critical thinking to check, refine & challenge outputs

→ Communication & collaboration to bring others along

We spent a lot of time in this cohort talking about that shift:

Expertise is now on demand. If something is repetitive, rules-based or pattern-heavy, machines will do it better.

Our edge is how we frame problems, set intent and shape value.

One of my favourite moments was when a participant didn’t type a prompt at all.

They drew what they wanted – a rough sketch of an infographic – snapped a picture, and asked the AI to turn that drawing into the professional visual they had in mind. No immaculate prompt. Just clear intent, expressed in their natural way.

Another time, I demonstrated how to mobilise an AI agent to use other AI tools to achieve a goal: asking a language model to design a workflow, delegate pieces of work to specialised tools, then orchestrate the results back into a final output. Humans held the steering wheel; AI did the heavy lifting.
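For readers curious about what "AI using other AI tools" looks like under the hood, here is a minimal sketch of that plan, delegate and assemble loop. It is an illustration under stated assumptions, not the setup used in the session: `plan_workflow` is a hypothetical stand-in for the language model's planning step (it returns a fixed plan), the three tools are offline stubs rather than real AI services, and the `approve` callback is one way to keep a human on the steering wheel.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical "specialised tools". In a real setup each would call a
# different AI service; here they are stubs so the sketch runs offline.
def research_tool(task: str) -> str:
    return f"[research notes on: {task}]"

def writing_tool(task: str) -> str:
    return f"[draft text for: {task}]"

def image_tool(task: str) -> str:
    return f"[image brief for: {task}]"

TOOLS: Dict[str, Callable[[str], str]] = {
    "research": research_tool,
    "write": writing_tool,
    "visualise": image_tool,
}

def plan_workflow(goal: str) -> List[Tuple[str, str]]:
    """Hypothetical stand-in for the language model's planning step.

    A real orchestrator would ask a model to break the goal into steps;
    this version returns a fixed plan so the flow is easy to follow.
    """
    return [
        ("research", f"background for '{goal}'"),
        ("write", f"summary of '{goal}'"),
        ("visualise", f"infographic for '{goal}'"),
    ]

def orchestrate(goal: str, approve: Callable[[str, str], bool]) -> str:
    """Plan, delegate each step to a tool, then assemble the results.

    `approve` is the human checkpoint: a step only runs if a person
    signs it off; unapproved steps are recorded as skipped.
    """
    results = []
    for tool_name, task in plan_workflow(goal):
        if not approve(tool_name, task):
            results.append(f"(skipped {tool_name}: not approved)")
            continue
        results.append(TOOLS[tool_name](task))
    return "\n".join(results)

if __name__ == "__main__":
    # Approve everything except the image step, as a human reviewer might.
    print(orchestrate("AI upskilling update", lambda tool, task: tool != "visualise"))
```

The design choice worth noticing is that the human approval sits inside the loop, so the AI does the planning and the heavy lifting while a person still decides which steps actually run.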

We worked through practical examples like this every week. By the end, we were tackling tasks that would previously have taken the team weeks, and reaching the intended output in about 30 minutes, often with time to spare.

That’s the real potential: not replacing human input, but augmenting human capability.

A Different Kind of AI Programme

This was not a standard “here’s how to use the tool safely” training.

Key design choices:

→ Duration: 75 minutes a week for six months

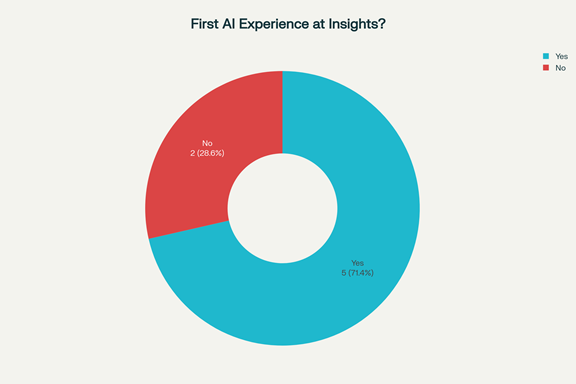

→ Participants: 71% had never had formal AI learning at work

Curriculum highlights:

→ Supporting digital transformation

→ From industrial to digital mindset

→ AI governance & risk

→ Building accessible & inclusive products

→ Identifying AI use cases in real workflows

→ How generative AI works (without the maths headache: you don’t need to understand email protocols to send an email)

→ Public narrative & storytelling with AI (storytelling is a human superpower; clear narrative helps you get better results from AI)

Sessions were deliberately built around creativity, critical thinking, communication, collaboration and curiosity. We educated people as human beings, not as machines. The emphasis wasn’t on retaining and reciting content, but on problem-solving and conversation.

The fact that these skills also make you dramatically better at using AI is the whole point – they’re simply more valuable in a digital economy because they’re so hard for machines to replicate.

Crucially, this programme only existed because a leader was willing to back an unusual approach. Tanya Boyd gave me the trust and flexibility to design a curriculum that went well beyond security, prompt engineering and tool selection. She greenlit something that was, frankly, a little bit out there for a corporate AI initiative.

The aim was never “everyone must become a prompt engineer”. The aim was to give people the human-centred skills they need to thrive in the future of work, whatever tools come next.

What’s hot today might not be hot tomorrow – but taking an intent-driven approach to work will always be key to leveraging AI.

How We Measured the Impact

I’m proud of the ideas, but I’m prouder that we put them to the test.

Participants rated their own confidence and agreement with specific statements on a 1–6 scale.

Things like:

→ “I feel confident choosing the right AI tool for a task.”

→ “I have clarity on 2–3 tasks I could streamline with AI.”

→ “I understand where AI can add value versus create risk.”

→ “AI feels approachable & easy to experiment with.”

After five months, they looked back and rated where they were now against where they had been at the start. We also asked about:

→ How often they now use AI

→ How much time they save each week

→ Their intention to apply AI use cases in the next 30 days

→ How likely they were to recommend the sessions to a colleague

So every chart you’ll see referenced below – from “Confidence Metrics: Before vs After” to “Intention & Behaviour Change” – comes from participants assessing their own confidence, understanding and behaviour before and after the programme.

This wasn’t my opinion. It was their lived experience.

Six Numbers That Tell the Story

9.7/10 Net Promoter Score – people weren’t just content; they were highly likely to recommend the programme.

115% increase in confidence choosing the right AI tool – average scores went from 1.86 to 4.00 out of 6.
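The percentage figures here are relative changes on the averages. A quick sketch of the arithmetic, using only the numbers reported in this post (the helper name `percent_increase` is just illustrative):

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    if before == 0:
        raise ValueError("baseline must be non-zero for a relative change")
    return (after - before) / before * 100

# Confidence choosing the right AI tool: average rating on the 1-6 scale.
print(f"{percent_increase(1.86, 4.00):.0f}%")  # 115%

# Daily AI use went from 57% to 86% of participants: a 29-point rise,
# which is roughly a 51% relative increase.
print(f"{86 - 57} points, {percent_increase(57, 86):.0f}% relative")
```

The second line is worth keeping in mind when reading shares of participants: a jump in percentage points and a percentage increase are different measures of the same change.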

86% now use AI daily (up from 57%) – AI moved from theory to daily practice.

Most participants save 2–4 hours every week using AI – and 86% report saving at least 2 hours weekly.

A 76% jump in seeing AI as relevant across most job roles – a shift from “AI is for tech people” to “AI is for my work”.

88% strongly agreed their organisation was proactively supporting their personal development & future skills.

And underneath those six headline stats, there’s a deeper story.

Confidence and Core Skills: People Took the Wheel

We saw significant improvements across all the core skills we measured:

→ Confidence using AI tools in their role

→ Confidence writing prompts & instructions

→ Confidence reviewing AI outputs for accuracy & bias

→ Confidence explaining AI concepts to colleagues

The “Confidence Metrics: Before vs After” chart shows this clearly.

Those gains didn’t come from memorising prompt templates. They came from practising intent:

→ What am I actually trying to achieve?

→ Who is this for, & what do they care about?

→ How will I know if the AI’s answer is good enough or safe enough?

Once people can answer those questions, the prompts almost write themselves.
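To make that concrete, here is a small sketch of how answers to the three intent questions can be turned straight into a prompt. The `Intent` structure, the `build_prompt` helper and the survey-summary example are hypothetical illustrations, not a template from the programme; the point is that the prompt is assembled from clear intent rather than memorised wording.

```python
from dataclasses import dataclass

@dataclass
class Intent:
    """Answers to the three intent questions, captured before prompting."""
    goal: str           # What am I actually trying to achieve?
    audience: str       # Who is this for, and what do they care about?
    success_check: str  # How will I know the answer is good or safe enough?

def build_prompt(intent: Intent, material: str = "") -> str:
    """Turn a clear intent into a plain-language prompt.

    Hypothetical helper: the wording is one possible framing, not a
    prescribed template. Optional source material is appended last.
    """
    lines = [
        f"Goal: {intent.goal}",
        f"Audience: {intent.audience}",
        f"Before answering, check your output against this: {intent.success_check}",
    ]
    if material:
        lines.append(f"Source material:\n{material}")
    return "\n".join(lines)

prompt = build_prompt(Intent(
    goal="Summarise our quarterly learning survey in five bullet points",
    audience="Busy team leads who want actions, not statistics",
    success_check="Every bullet names a concrete next step and no figures are invented",
))
print(prompt)
```

Notice that the success check travels with the prompt: the same answer that tells you whether the output is good enough also tells the AI what "good enough" means.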

Understanding AI in Context: From Hype to Judgement

The biggest jumps in understanding were in:

→ Understanding how AI affects their role & team

→ Understanding where AI adds value versus creates risk

→ Awareness of data privacy, security & compliance

The “AI Understanding: Before vs After” chart visualises this shift.

We weren’t trying to turn everyone into AI ethicists. I wanted everyday decision-makers to know when to say:

→ “Yes, this is a great fit for AI.”

→ “Not yet, we need better safeguards.”

→ “Absolutely not, this one stays human.”

That shift from vague hype to grounded judgement is a huge part of responsible adoption.

Productivity, Clarity and Time Saved

Generative AI is ruthlessly intent-driven. If you don’t know what you’re asking for, it will happily give you beautiful nonsense.

So we spent a lot of time on task clarity:

→ Breaking work down into steps

→ Separating thinking work from formatting work

→ Asking: “Where exactly could AI help here?”

In the “Productivity & Impact: Before vs After” chart, you can see big lifts in:

→ Belief that AI makes their work more efficient

→ Clarity on 2–3 tasks they will streamline with AI

→ Confidence integrating AI into daily workflows

→ Seeing AI as more of an opportunity than a risk

And then the punchline: the “Estimated Time Saved Per Week Using AI” chart, where most participants report saving 2–4 hours every week, and a few are saving even more.

Those aren’t mythical “consulting hours”; they’re real, self-reported gains.

Quality, Creativity and Problem-Solving

One of my priorities was to show that AI isn’t just a faster way to type. Used well, it’s a powerful thinking partner.

In the “Quality & Creativity: Before vs After” chart, we saw:

→ A 100% increase in using AI for creative idea generation

→ Strong gains in using AI to plan & structure projects

→ Improved quality of written & analytical work

→ Higher confidence in summarising & analysing information with AI

→ Better support for evidence-based decision making

We did live experiments:

→ Turning half-formed ideas into structured plans

→ Asking AI to critique our own thinking, research hunches & develop strategies

→ Using AI to generate multiple perspectives before making a decision

Participants weren’t outsourcing their judgement. They were using AI to widen and sharpen it.

Ethics, Accessibility and Inclusion – Baked In, Not Bolted On

A lot of AI programmes touch ethics on the last slide. We built it into the spine of the curriculum.

The “Ethical Use & Accessibility: Before vs After” chart shows:

→ Increased awareness of harms like bias, exclusion & misinformation

→ More confidence applying fairness, accountability & transparency principles

→ Stronger awareness of accessibility requirements

→ Higher agreement with designing content & services with inclusion in mind

We framed ethics not as a brake, but as a way to build trustworthy systems that actually work for more people.

Collaboration and Culture: From Quiet Anxiety to Open Experimentation

AI can be a lonely topic. People worry about sounding foolish, or “using it wrong”.

The “Collaboration & Culture: Before vs After” chart tells a different story by the end of the programme:

→ People felt more comfortable discussing AI with colleagues

→ Sharing AI tips & examples became normal

→ Comfort with experimenting & learning in public rose

→ Teams discussed AI use, risk & good practice more often

→ Perceived leadership support for responsible AI use increased

In other words, AI stopped being something people played with in secret and started becoming a shared, strategic conversation.

Learning Experience Quality and Future Intent

In “Learning Experience Quality”, participants gave high marks for:

→ Job relevance

→ Clarity of examples

→ Balance of concepts & practice

→ Interactivity, pace & structure

→ Whether the learning met or exceeded expectations

And in “Intention & Behaviour Change”, we saw strong agreement that:

→ They intend to apply specific AI use cases in the next 30 days

→ They expect to provide more value in their role

→ They expect to be more productive as a direct result of the sessions

→ Their organisation has taken a proactive approach to supporting their future skills

The “Top 10 Areas of Greatest Improvement” chart shows the biggest jumps in:

→ Understanding AI value vs risk

→ Using AI for creative generation

→ Improving written & analytical work

→ Seeing AI as relevant to all roles

→ Choosing the right AI tool

→ Task clarity & summarising information

Notice the pattern: these are human-centric skills amplified by AI, not narrow tool tricks.

Stories from the Room

The data is satisfying, but the human stories are what stay with me.

One participant’s feedback:

“Your input has been invaluable… the learning has been game-changing across the entire team and given me confidence to try, while still staying safe.”

Another said the sessions:

“Made a complex, overwhelming topic feel clear, practical and genuinely exciting.”

Another described how the weekly sessions:

“Shifted our mindset, inviting us to stretch our skills and expand our sense of what we could do as a team.”

And throughout, the thing I heard most was relief – relief that AI could be approached with curiosity, humour and honesty, not just pressure and jargon.

I played a small part in this. The real magic came from a team willing to show up every week, experiment together and be honest about their fears as well as their hopes.

Why This Matters for Leaders Right Now

Research from IBM, Deloitte & the World Economic Forum shows that the half-life of professional skills has fallen from around 10–15 years to about 5 years, and for technical skills to roughly 2.5 years. You would not want a surgeon who has not updated their skills since 2016, so why are we comfortable with the same in our own jobs?

Generative AI will keep changing. Today’s “must-have” tools will date quickly. New capabilities will appear faster than your procurement cycle. We’re moving towards a world where you won’t always need to buy applications – you’ll assemble and adapt what you need, tailor-built for your organisation, and rebuild it months later as your context evolves.

What doesn’t date is a workforce that is:

→ Clear about outcomes

→ Skilled at shaping intent

→ Curious & creative in exploring possibilities

→ Grounded in ethics, accessibility & inclusion

→ Confident enough to teach AI to use other AI tools, & to say “no” when it’s the wrong fit

That’s what we built with this programme. And, importantly, we did it with people in traditionally non-technical roles.

This is why I believe AI upskilling has to start from a human-centric lens:

When you invest in clarity, curiosity, creativity, communication and collaboration, AI becomes a force multiplier.

When you skip those and start with tools, AI becomes another expensive headache.

Three Questions to Take Back to Your Organisation

If you’re a senior leader wondering where to start, I’d invite you to take these into your next strategy conversation:

→ Do our people feel confident enough to experiment with AI, or are they quietly waiting for permission (or perfection)?

→ Where in our organisation is task clarity low, but expectations for AI impact high? Those are the danger zones for frustration.

→ What would it look like to invest in human-centric AI skills first, & let our tool choices follow?

That’s the space I work in: helping leaders and teams lead their organisations into the AI era with programmes that blend human psychology, technical depth and a relationship-first approach.

Insights were willing to pioneer something genuinely new – a long-term, human-centred AI upskilling journey that treated people as thinkers, not button-pushers. The AI Upskills report didn’t just show that it worked; it validated a philosophy:

The future of AI in your organisation is not about the tools you buy. It’s about the humans you develop and the clarity of intent you help them build.

When you start there, you don’t just keep up with the AI era. You help shape it.

